Your product backlog has 287 items, and 43 are marked "high priority". Your roadmap hasn't shipped a feature customers actually asked for in four months. And the last prioritization meeting ended with three teams convinced their work is more important than everyone else's. That's a framework problem.

Most product organizations don't struggle to generate ideas; they drown in them. The backlog grows faster than the teams can ship, and priorities shift with every new stakeholder meeting. The frameworks teams rely on - MoSCoW, ICE, value-effort matrices - either collapse at scale or devolve into organizational politics.

The result is predictable:

- backlogs bloated with hundreds of half-prioritized features,
- roadmaps that reflect who lobbied hardest rather than what delivers value, and
- product teams spending more time defending decisions than building products.

Customer satisfaction stalls. Strategic initiatives get buried under "quick wins" that never end. And every quarter, leadership asks the same question: "Why aren't we moving faster?"

This guide cuts through the noise. It explains why backlog prioritization fails in complex organizations, what separates effective prioritization frameworks from theater, and how to implement a company-wide system that connects feature decisions to measurable business outcomes.

Why backlog prioritization breaks in complex product organizations

Backlog chaos isn't inevitable. It is a symptom of frameworks that can't scale. The techniques that work for small teams - lightweight, intuitive, built for speed - collapse when organizations grow beyond a single product line. Understanding why this happens is the first step to fixing it.

What feature prioritization means at enterprise scale

Feature prioritization stops being a team sport the moment your organization runs multiple product lines. What worked for a single product team - sticky notes, quick votes, gut calls - will collapse under the weight of dependencies, shared resources, and conflicting business objectives.

At enterprise scale, prioritization becomes a negotiation across product managers, engineering leads, and executive stakeholders who rarely share the same definition of business value.

Most often, the problem lies less in the idea of using a framework than in the specific technique chosen and the discipline with which it is applied.

Most techniques were designed for small, autonomous teams. They assume clear ownership, stable priorities, and direct access to customer feedback. But in complex organizations, a single feature idea might touch four different teams, require architecture changes that impact three other roadmaps, and serve customer needs that conflict across market segments.

As the product development process becomes more complex, disciplined and structured feature prioritization is what separates working backlogs from broken ones.

How bloated product backlogs damage customer satisfaction

A backlog with 300 feature ideas is not a sign of ambition. It is an indicator that prioritization has failed. Bloated backlogs damage organizations in three ways.

- They obscure what actually matters. When everything is tracked, nothing is truly prioritized. Product teams spend more time grooming the backlog than delivering the features customers expect. Avoiding this requires a clear process for prioritizing features, so the team focuses on the most valuable work and the backlog does not overload.
- They slow down decision-making. Every feature request gets added “for consideration,” which trains stakeholders to lobby harder rather than accept trade-offs. The backlog becomes a political artifact rather than a strategic tool. Team members burn energy defending old ideas instead of exploring new ones.
- They delay the features that would improve customer satisfaction. High-impact work gets stuck behind dozens of “should have” items that never quite get killed. Meanwhile, user feedback points to clear pain points like search functionality that fails, performance features that lag, and basic features that frustrate, but the development team is buried under technical debt and half-prioritized initiatives.

The right framework lets teams overcome this problem. The mindset needs to shift accordingly: the root cause is rarely a lack of resources, but the lack of a unified prioritization framework that connects feature scoring to actual business goals and customer value.

Why teams need a stronger prioritization framework

Most product teams know their current approach isn’t working. They’ve tried prioritization techniques that looked promising in consultancy reports but fell apart in practice. The issue isn't commitment or skill - it's weak frameworks that can't handle the reality of product management: shifting business objectives, incomplete data, and stakeholders who all believe their feature is critical.

A robust framework, by contrast, enables teams to prioritize product features based on strategic objectives and customer value.

How to evaluate product features with real evidence

What separates effective prioritization from theater is evidence. Strong frameworks force product managers to answer hard questions with data: What customer needs does this address? How does it map to business goals? What’s the effort required, and do we have confidence in that estimate?

Evidence-based evaluation starts with structured inputs: user stories that capture real pain points; customer feedback from support tickets, not just feature requests from the loudest voices; market trends that show where customer value is shifting; and technical feasibility assessments that include technical debt implications, not just greenfield estimates.

The best product teams combine quantitative and qualitative signals: they track user satisfaction metrics alongside revenue impact. Opportunity scoring can also be used to assess feature importance by measuring how important each feature is to customers and how satisfied they currently are, helping teams prioritize what matters most.
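As a rough illustration of opportunity scoring, the sketch below uses one widely used formulation: importance plus the unmet gap between importance and satisfaction, floored at zero. That formula, the 0-10 scale, and the feature names and survey values are assumptions for illustration, not something this article prescribes.

```python
def opportunity_score(importance: float, satisfaction: float) -> float:
    """Importance plus the unmet gap (importance - satisfaction), never below importance."""
    return importance + max(importance - satisfaction, 0.0)


# Hypothetical survey averages on a 0-10 scale: (importance, satisfaction).
survey = {
    "search": (9.0, 4.0),
    "export": (6.0, 7.0),
    "dark_mode": (5.0, 2.0),
}

# Rank features from the largest to the smallest opportunity.
ranked = sorted(survey, key=lambda name: opportunity_score(*survey[name]), reverse=True)

for name in ranked:
    print(name, opportunity_score(*survey[name]))
```

Flooring the gap at zero means an over-served feature is not penalized below its own importance; teams that want to flag over-investment may prefer to keep the negative gap visible.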

Overall, it is not about perfect data. It is about making informed decisions with the best information available, weighing relative importance against resource availability, and documenting assumptions so the team can learn from what actually happens.

What good prioritization techniques share in common

Good prioritization techniques usually share three characteristics:

- Transparency. Team members understand why features rank where they do. The criteria are explicit, and when business objectives change, the framework adapts visibly so everyone sees why priorities shift. Reviewing and updating priorities regularly keeps them aligned with evolving business objectives.
- Balancing multiple dimensions. No single factor should dominate; good frameworks force teams to think in trade-offs. The RICE score, for example, weighs reach, impact, and confidence against effort, while the Kano model separates basic features from performance features and delighters.
- Enabling team collaboration. Prioritization frameworks provide structure for disagreement. Instead of arguing whether feature A or B is “better”, teams evaluate both against the same criteria, surface their assumptions, and let the framework guide the decision.

Weak frameworks fail on at least one of these characteristics. They’re opaque, reductive, or so loose they enable politics over strategy. That’s why choosing the right prioritization framework matters more than most teams realize.

Identifying suitable techniques for feature prioritization

Some prioritization frameworks fit certain contexts better than others. Teams therefore need to choose their technique carefully, because a poor fit has real consequences.

A technique that works for a startup product owner may not survive cross-functional alignment in a 500-person R&D organization. The prioritization technique has to match the team’s maturity, the complexity of the product backlog, and the types of decisions that are actually being made. The product manager plays a key role in evaluating and implementing the most suitable technique for the team's specific context.

The limits of the MoSCoW method beyond team level

The MoSCoW method is seductive because of its simplicity. “Must have”, “Should have”, “Could have”, and “Won’t have” form four categories for fast decisions and easy stakeholder communication (Exhibit 1).

In this context, 'should haves' are important requirements that are not essential for immediate success, while 'could haves' are optional, nice-to-have features that add value but are not critical for the project's success. The method works beautifully for single-team sprints where the scope is fixed and the trade-offs are obvious.

Exhibit 1: The MoSCoW model

But it breaks at scale because it offers no mechanism for comparing features within a category. If you have 40 “must-haves” and no ranking among them, the problem has only been relabeled, and product managers end up negotiating category placement instead of debating actual priority.

The framework also ignores multiple dimensions of value. It doesn’t capture effort, risk, or customer impact. A feature can be marked “Must have” even though it delivers little business value. And when business objectives change, the model provides no systematic re-evaluation: categories get reshuffled manually, based on whoever has the strongest opinion.

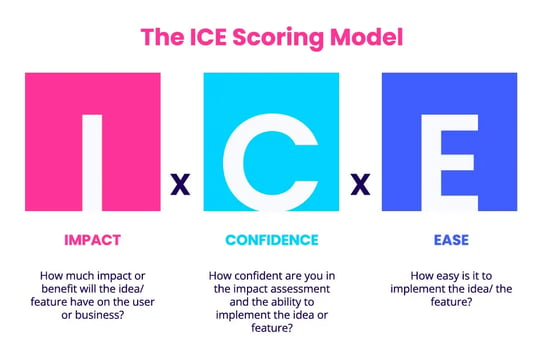

How the ICE scoring model supports rapid comparison

The ICE scoring model (Exhibit 2) solves MoSCoW's core weakness: it forces numerical comparison. Each feature gets scored on three dimensions, usually on a scale of 1 to 10. Multiply or average the scores, and you have a rank order. It's fast, lightweight, and particularly useful when you're drowning in feature requests and need to cut quickly.

- Impact. Measures how much the feature moves key business goals.
- Confidence. Reflects how certain someone is about their estimates.
- Ease. Captures the work required from the development team, including design, engineering, and testing.

Exhibit 2: The ICE scoring model

Together, the three factors surface which features deliver the most value for the least cost and risk. The model works well for early-stage product prioritization or when comparing many potential features with limited information.
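The ICE ranking described above can be sketched in a few lines. This is a minimal example, assuming the multiplicative variant and a 1-10 scale where a higher ease score means less work; the feature names and scores are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    impact: int      # 1-10: how much the feature moves key business goals
    confidence: int  # 1-10: how certain the estimates are
    ease: int        # 1-10: higher means less work for the development team

    def ice(self) -> int:
        # Multiplicative variant; averaging the three scores is the other common option.
        return self.impact * self.confidence * self.ease


# Hypothetical backlog items.
features = [
    Feature("Fix search relevance", impact=8, confidence=7, ease=5),
    Feature("Bulk export", impact=5, confidence=8, ease=8),
    Feature("AI assistant", impact=9, confidence=3, ease=2),
]

# Highest ICE score first.
ranked = sorted(features, key=Feature.ice, reverse=True)

for feature in ranked:
    print(f"{feature.ice():>4}  {feature.name}")
```

Note how the high-impact but uncertain and hard item drops to the bottom: multiplication punishes a single weak dimension more than averaging would.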

While ICE asks "how much impact will this have", the RICE framework quantifies "how many users will experience the impact over a given timeframe". This makes RICE more data-intensive but also more precise for organizations with mature analytics.

ICE is faster for rapid triage when you're cutting through dozens of feature requests without detailed metrics. It's less useful when features are interdependent or when you need to balance customer satisfaction against strategic bets. The model treats every feature as independent, which rarely reflects reality in complex product development processes.

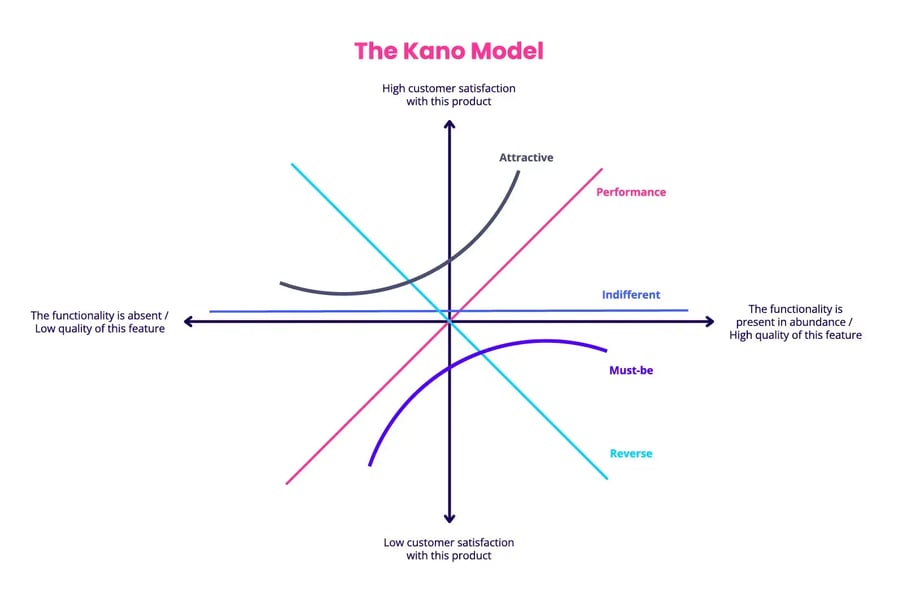

When to use the Kano model for customer-driven choices

The Kano model (Exhibit 3) asks a different question: How does this feature affect user satisfaction? It categorizes product features into five types:

- Basic features. Must-haves that customers expect
- Performance features. More is better
- Delighters. Unexpected value that creates loyalty
- Indifferent. Customers don’t care
- Reverse. Features that actually annoy users

Exhibit 3: The Kano model

This framework shines when you’re trying to improve customer satisfaction and need to understand where to invest. The Kano model helps teams identify and prioritize certain features - such as delighters, performance features, and basic features - that have the greatest impact on customer perceptions, leading to more satisfied customers.

The model requires direct user research: you need customer feedback through surveys or interviews that ask how users would feel if a feature existed versus if it didn’t.
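To make the paired-question survey concrete, the sketch below maps each respondent's two answers to a category using a commonly used Kano evaluation table. The answer labels, the extra "questionable" outcome for contradictory answers, and the sample responses are assumptions for illustration; real studies often use more nuanced aggregation than a simple majority.

```python
from collections import Counter

# Assumed answer scale for both questions, from most positive to most negative.
ANSWERS = ("like", "expect", "neutral", "tolerate", "dislike")

# Row: answer to "How would you feel if the feature existed?"
# Column: answer to "How would you feel if it did not exist?" (ordered as ANSWERS)
KANO_TABLE = {
    "like":     ("questionable", "delighter", "delighter", "delighter", "performance"),
    "expect":   ("reverse", "indifferent", "indifferent", "indifferent", "basic"),
    "neutral":  ("reverse", "indifferent", "indifferent", "indifferent", "basic"),
    "tolerate": ("reverse", "indifferent", "indifferent", "indifferent", "basic"),
    "dislike":  ("reverse", "reverse", "reverse", "reverse", "questionable"),
}


def classify(functional: str, dysfunctional: str) -> str:
    """Classify one respondent's pair of answers."""
    return KANO_TABLE[functional][ANSWERS.index(dysfunctional)]


def dominant_category(responses: list[tuple[str, str]]) -> str:
    """Return the most frequent category across all respondents."""
    counts = Counter(classify(f, d) for f, d in responses)
    return counts.most_common(1)[0][0]


# Hypothetical responses for a single feature: (if it existed, if it did not exist).
responses = [
    ("like", "dislike"),
    ("like", "dislike"),
    ("expect", "dislike"),
    ("like", "neutral"),
]
print(dominant_category(responses))
```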

This makes Kano slower than ICE or MoSCoW, but far better at surfacing what customers actually value versus what they say they want. Use it when customer needs are unclear or when you’re entering a new market segment and need to map the satisfaction landscape.

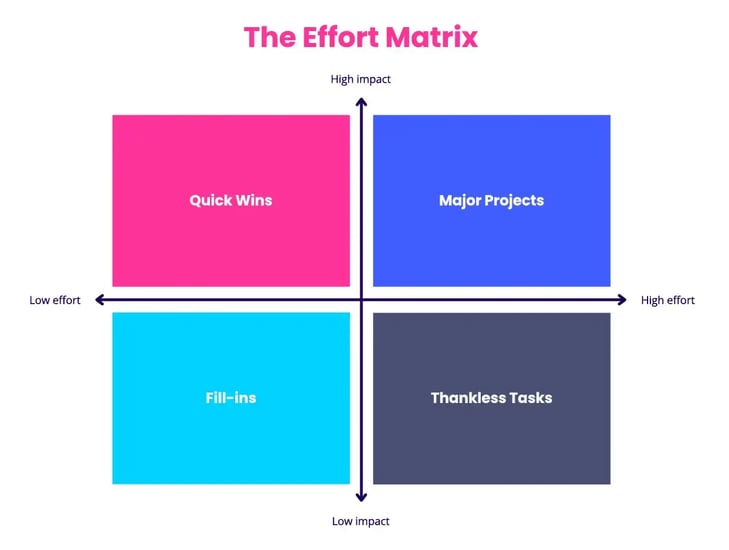

Why effort matrix tools fail without strategic context

The effort matrix (Exhibit 4) - sometimes also called value vs. effort or impact vs. effort - plots features on two axes: business value (or impact) on one side, effort on the other. The resulting quadrants tell you what to prioritize.

Exhibit 4: The effort matrix

It's visually intuitive and great for team alignment: everyone can see why a certain feature ranks where it does. The problem is that "value" and "effort" are dangerously squishy terms. So, without clear prioritization criteria, different teams will score the same feature differently.

Effort matrix tools work only when you've already aligned on what "value" means - revenue, user value, strategic positioning, or something else - and when your effort estimates are grounded in real data. Use the matrix as a communication tool after you've done the hard work of scoring, not as a replacement for rigorous prioritization.
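A small sketch shows how quickly the quadrant assignment diverges when teams score without shared definitions. The quadrant labels, the 1-10 scale, the midpoint, and the two teams' scores are all assumptions for illustration.

```python
def quadrant(value: float, effort: float, midpoint: float = 5.0) -> str:
    """Place a feature in one of four quadrants of a value vs. effort matrix."""
    high_value = value >= midpoint
    high_effort = effort >= midpoint
    if high_value and not high_effort:
        return "quick win"
    if high_value and high_effort:
        return "major project"
    if not high_value and not high_effort:
        return "fill-in"
    return "time sink"


# Hypothetical: two teams score the SAME feature as (value, effort) on a 1-10 scale.
scores_by_team = {
    "growth": (8, 3),    # values new sign-ups, underestimates integration work
    "platform": (4, 7),  # values stability, knows the architecture cost
}

placement = {team: quadrant(v, e) for team, (v, e) in scores_by_team.items()}
print(placement)
```

The same feature lands in opposite corners of the matrix, which is why agreed scoring criteria have to come before the chart.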

Implementing a company-wide prioritization framework: What matters most?

Company-wide prioritization requires more than a scoring model. A framework that works for one product team often dies when the organization tries to scale it.

To be successful, it requires shared language, aligned incentives, and a process that survives contact with competing priorities. Involving every team member in the prioritization process is essential to ensure buy-in and a comprehensive evaluation of all perspectives.

But most organizations skip this foundation and wonder why their framework gets ignored six weeks after launch.

Feature prioritization with product management tools

Prioritization fails when it's disconnected from strategy. Every feature decision should trace back to specific business objectives: revenue growth, market expansion, customer retention, or operational efficiency. If you can't draw that line, you're prioritizing in a vacuum.

The best product teams establish clear product management goals at the start of each planning cycle. These aren't vague aspirations like "delight customers" but measurable targets tied to business outcomes: "Reduce churn by 15%. Enter two new market segments. Cut support tickets related to search functionality by 40%."

When these goals are explicit, prioritizing features becomes a question of contribution: Which features move which goals, and by how much?

This alignment also prevents the most common prioritization failure: optimizing locally while losing globally. A feature that maximizes value for one product line might conflict with another team's roadmap or drain shared resources. Without company-level goals, every team optimizes for itself. The backlog becomes a collection of locally rational choices that add up to strategic incoherence.

Unified prioritization framework for cross-team use

A unified framework means that every team speaks the same language and evaluates features against the same dimensions. When product teams across different business units all assess impact, effort, and confidence, you can compare priorities across the portfolio. When they don’t, you get teams talking past each other with incompatible scoring systems.

For enterprise-scale prioritization, we recommend the RICE framework (Exhibit 5) as the primary scoring model. And here's why: it balances rigor with practicality, provides numerical comparability across teams, and handles the four factors that matter most - reach, impact, confidence, and effort.

Exhibit 5: The RICE model

Four factors, consistent definitions, and a formula that produces comparable scores: (Reach × Impact × Confidence) ÷ Effort. Still, each organization must evaluate whether the RICE framework is the right fit, as different contexts demand different tools.
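The formula above translates directly into code. The sketch below assumes particular units (reach as users per quarter, confidence as a fraction between 0 and 1, effort in person-months); those units, the class name, and the example features are illustrative assumptions, and each organization would fix its own definitions.

```python
from dataclasses import dataclass


@dataclass
class RiceInput:
    name: str
    reach: float       # assumed unit: users affected per quarter
    impact: float      # assumed scale: relative impact per user
    confidence: float  # assumed scale: 0.0 (guess) to 1.0 (certain)
    effort: float      # assumed unit: person-months


def rice_score(item: RiceInput) -> float:
    """(Reach x Impact x Confidence) / Effort, as given in the text."""
    if item.effort <= 0:
        raise ValueError("effort must be positive")
    return item.reach * item.impact * item.confidence / item.effort


# Hypothetical features from two different teams, scored with the same definitions.
backlog = [
    RiceInput("Search relevance fix", reach=2000, impact=2, confidence=0.8, effort=4),
    RiceInput("Bulk export", reach=500, impact=3, confidence=0.5, effort=1),
    RiceInput("Platform migration", reach=10000, impact=0.5, confidence=0.9, effort=12),
]

ranked = sorted(backlog, key=rice_score, reverse=True)

for item in ranked:
    print(f"{rice_score(item):8.1f}  {item.name}")
```

Because every team feeds the same four inputs into the same function, the resulting scores can be compared across the portfolio, which is the point of a unified framework.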

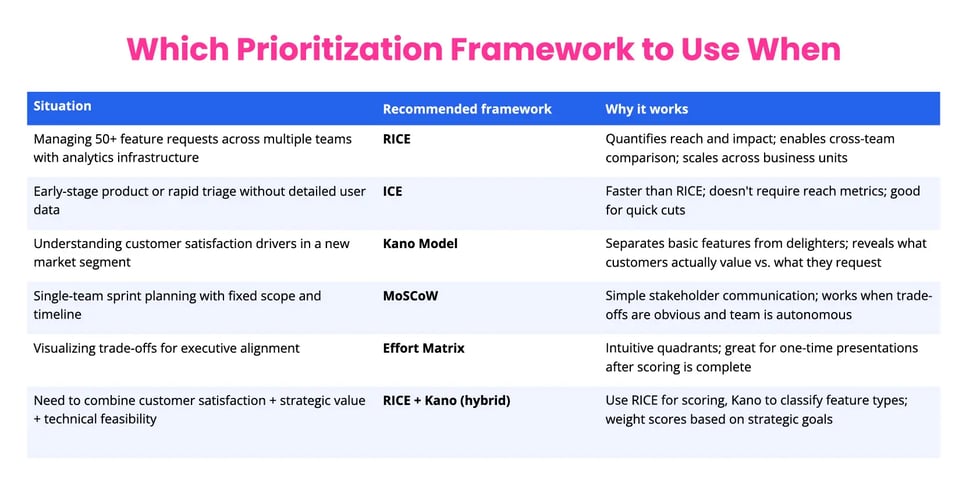

Therefore, use the following decision matrix to choose the right approach:

Exhibit 6: The prioritization framework decision table

For cross-team collaboration to work, governance needs to define how scores translate into roadmap decisions: What's the threshold for a "yes"? How often do you re-score as market conditions shift? What happens when two high-scoring features compete for the same engineering resources? Without these rules, even RICE becomes a suggestion, and suggestions get ignored when politics heat up.

From feature scoring results to roadmap commitments

Scoring features is the easy part. Turning scores into a product roadmap is where most frameworks collapse, because a rank-ordered list of feature ideas isn't a roadmap - it's raw material. You still need to sequence work, manage dependencies, balance quick wins against major projects, and communicate trade-offs to stakeholders who won't like your choices.

The transition from scores to commitments requires three decisions.

- Set a cut line. Not everything above a certain score gets built - resource availability and team capacity matter. Be explicit about what's in, what's deferred, and what's killed.
- Cluster features into themes or initiatives. A roadmap built from 47 disconnected features is unshippable. Group related work so teams can build momentum and users see coherent progress.
- Build in feedback loops. The prioritization process doesn't end when the roadmap is published. Track which features actually improve customer satisfaction or hit business objectives. Update your confidence scores based on what shipped versus what you predicted.
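The first of these decisions, the cut line, can be sketched as a simple pass over the scored backlog. This is a minimal greedy example under assumed inputs (a minimum score, a capacity in person-months, invented features); it is not an optimal packing and it ignores dependencies, which a real roadmap would have to handle.

```python
def apply_cut_line(scored, capacity, min_score):
    """Split (name, score, effort) items into committed, deferred, and killed.

    Items below min_score are killed. The rest are taken in descending score
    order while they still fit into the remaining capacity; items that do not
    fit are deferred rather than dropped.
    """
    committed, deferred, killed = [], [], []
    remaining = capacity
    for name, score, effort in sorted(scored, key=lambda item: item[1], reverse=True):
        if score < min_score:
            killed.append(name)
        elif effort <= remaining:
            committed.append(name)
            remaining -= effort
        else:
            deferred.append(name)
    return committed, deferred, killed


# Hypothetical scored backlog: (name, score, effort in person-months).
scored_backlog = [
    ("Search fix", 800, 4),
    ("Bulk export", 750, 1),
    ("SSO", 600, 6),
    ("Theme editor", 120, 2),
]

committed, deferred, killed = apply_cut_line(scored_backlog, capacity=8, min_score=300)
print("in:", committed)
print("deferred:", deferred)
print("killed:", killed)
```

Returning three explicit lists mirrors the advice above: stakeholders see not only what is in, but also what was deferred for capacity reasons and what was killed on score.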

Agile software development thrives on this rhythm: prioritize, build, measure, re-prioritize. Story mapping helps visualize how features connect to user journeys, and regular reviews with product owners and team members keep priorities aligned as conditions change.

The ultimate goal is a living system that keeps the backlog clean, the team focused, and stakeholders confident that decisions are grounded in evidence.

How ITONICS supports evidence-based backlog prioritization

Backlog prioritization at scale requires more than discipline: it requires infrastructure. Spreadsheets break when you're managing hundreds of feature requests across different teams with shifting business objectives. Slide decks become obsolete the moment priorities change.

- One single source of truth. One place where teams easily share what they learned and find what's changing and what's moving.
- Score and prioritize objectively. With weighted scoring models, the highest-impact projects can be identified and rise to the top (Exhibit 7).
- Boost the process. Roadmaps provide a high-level overview of goals, initiatives, and deliverables, helping teams stay aligned with business goals (Exhibit 8).


Exhibit 8: Roadmaps help keep an overview of feature prioritization

For organizations serious about product prioritization frameworks that survive beyond the next reorg, ITONICS provides the structure to make evidence-based decisions repeatable, transparent, and aligned with business value. It won't make the hard trade-offs for you. But it ensures those trade-offs are grounded in data, visible to stakeholders, and adaptable when market conditions shift.

FAQs on backlogs at scale

What is the most effective prioritization framework for large organizations?

There is no single best framework. Large organizations need a system that combines multiple methods. ICE can cut through noise fast. RICE and effort matrix tools help compare value against cost. Kano adds insight into customer satisfaction. The real power comes from using these techniques together under one unified prioritization framework.

How often should product teams rescore features in the product backlog?

Rescore when strategy shifts, new customer feedback arrives, or effort estimates change. Quarterly cycles work for most organizations, but teams with high product velocity may update scores monthly. The goal is not constant rescoring but maintaining a backlog that reflects current priorities.

How do frameworks like MoSCoW or Kano help improve customer satisfaction?

MoSCoW helps teams identify must-have features, but it loses accuracy at scale. Kano reveals which product features drive satisfaction by distinguishing basics from delighters. Used together, they help teams avoid over-investing in “could have” items and focus on the features users actually value.

When should teams use the ICE scoring model instead of more complex techniques?

Use ICE when you need rapid comparison across many product features and only have limited data. It works well early in discovery or when the backlog is bloated. It becomes less helpful when dependencies matter or when customer satisfaction needs deeper analysis with models like Kano.

Why do effort matrix tools fail without strategic alignment?

Impact and effort sound simple, but teams interpret them differently unless value, risk, and cost are defined clearly. Without shared definitions and a clear prioritization framework, an effort matrix becomes a subjective debate. It works only when it sits on top of structured feature scoring with agreed criteria.