Rework consumes 30 to 50 percent of development activity across most product organizations. This statistic originates from research on software teams, but the pattern holds across hardware, medical devices, and industrial products.

The cost is staggering. A six-month delay from late-stage changes erodes 33 percent of lifetime profitability. Most teams accept this as inevitable friction in the product development process.

It's not inevitable. The majority of rework originates in the discovery process, the phase where teams define problems, validate assumptions, and test ideas before committing resources. This blog outlines seven product discovery techniques that cut rework in half by catching errors when they cost the least to fix.

The hidden economics of product discovery decisions

Most organizations track research and development spending by phase. They measure development costs, testing budgets, and time to market. Few measure the cost of decisions made before development begins.

Discovery decisions carry asymmetric risk. A flawed assumption validated in week two costs hours to correct. The same assumption discovered in month eight costs weeks and triggers cascading changes across delivery teams.

The economics are clear: early detection prevents expensive downstream correction.

Why 50% of development rework starts in the product discovery process

Rework originates when teams build solutions before understanding customer needs. They skip assumption testing, rely on customer feedback without validation, or move into the development phase with unresolved ambiguity about user needs or market fit.

Research shows that requirements defects cost 10 to 100 times more to fix after release than during discovery. When teams rush through product discovery phases, they transfer uncertainty into development. Development teams then discover gaps through failed tests, user complaints, or missed business goals.

The pattern repeats across industries. Teams under pressure to show progress skip structured discovery and pay the price in extended timelines and additional development cycles.

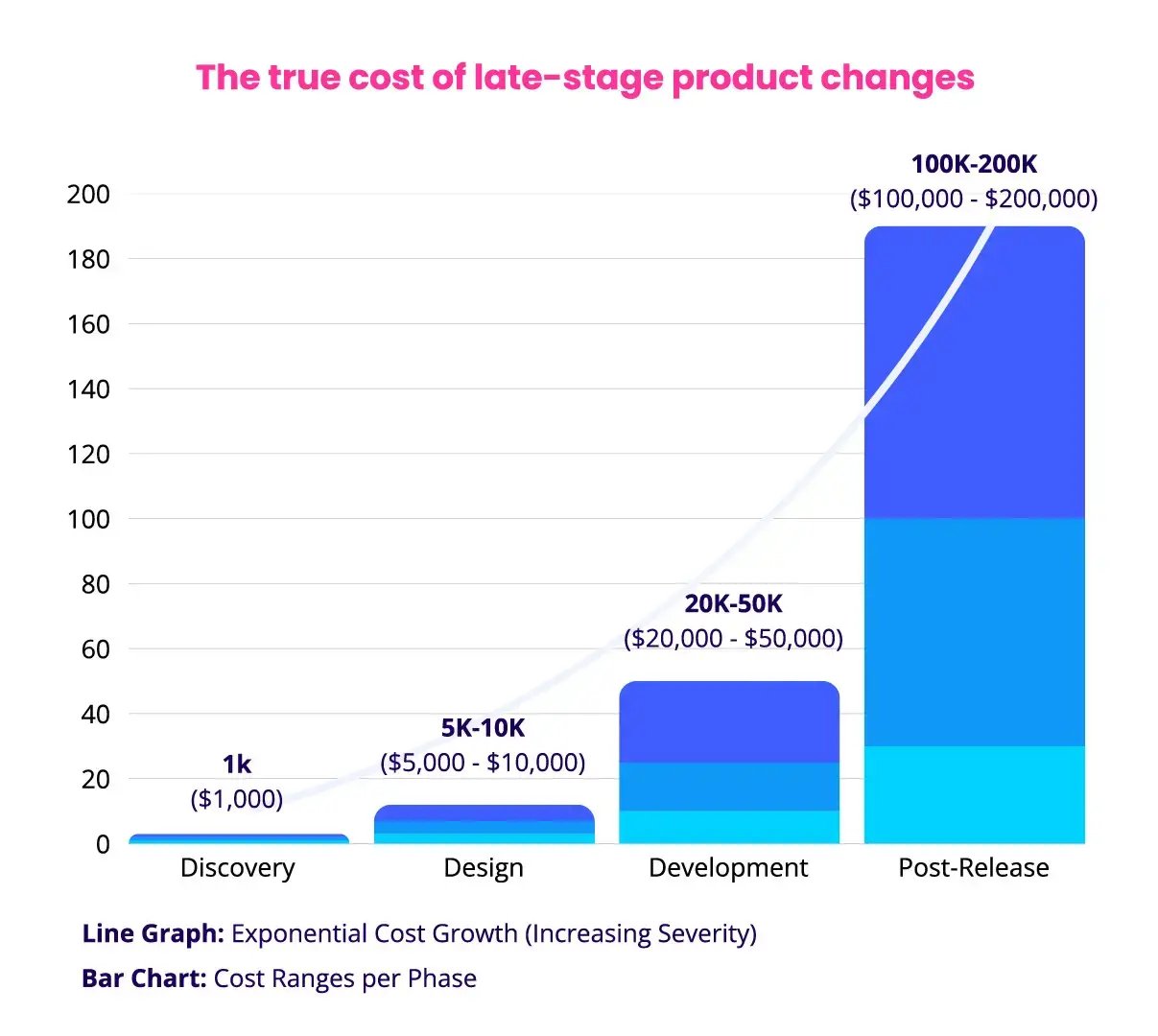

The compounding cost of late-stage pivots (with benchmark data)

Late-stage changes compound in ways that early adjustments do not. A pivot in discovery affects one or two people for a few days. A pivot during development affects entire delivery teams, delays adjacent projects, and forces rework across documentation, testing, and integration.

Benchmark data shows the escalation. Changing a requirement during discovery costs one unit of effort. The same change during design costs 5 to 10 units. During development, it costs 20 to 50 units. After release, it costs 100 to 200 units.

Translated to dollars: if one unit equals $1,000 in labor and overhead, a discovery-phase fix costs $1,000. That same fix costs $5,000 to $10,000 in design, $20,000 to $50,000 during development, and $100,000 to $200,000 post-release.
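The dollar figures above are the benchmark multipliers applied to a unit cost. Here is a minimal Python sketch of that arithmetic, using the multiplier ranges quoted in this section and the illustrative $1,000 unit. The names `PHASE_MULTIPLIERS` and `fix_cost_range` are hypothetical and chosen only for this example.

```python
# Effort multipliers per phase as (low, high), taken from the benchmark figures above.
PHASE_MULTIPLIERS = {
    "discovery": (1, 1),
    "design": (5, 10),
    "development": (20, 50),
    "post-release": (100, 200),
}


def fix_cost_range(phase: str, unit_cost: float = 1_000.0) -> tuple[float, float]:
    """Return the (low, high) cost of one requirement change in the given phase."""
    low, high = PHASE_MULTIPLIERS[phase]
    return low * unit_cost, high * unit_cost


if __name__ == "__main__":
    for phase in PHASE_MULTIPLIERS:
        low, high = fix_cost_range(phase)
        print(f"{phase:>12}: ${low:,.0f} to ${high:,.0f}")
```

Swapping in your own blended unit cost shows how quickly a late change outgrows the cost of an extra week of discovery.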

The real cost extends beyond immediate rework. Late pivots erode team confidence, create technical debt, and consume budget reserved for innovation. Organizations that accept high rework rates stay reactive rather than competitive.

Exhibit 1: Cost of fixing requirements defects by development phase showing exponential increase

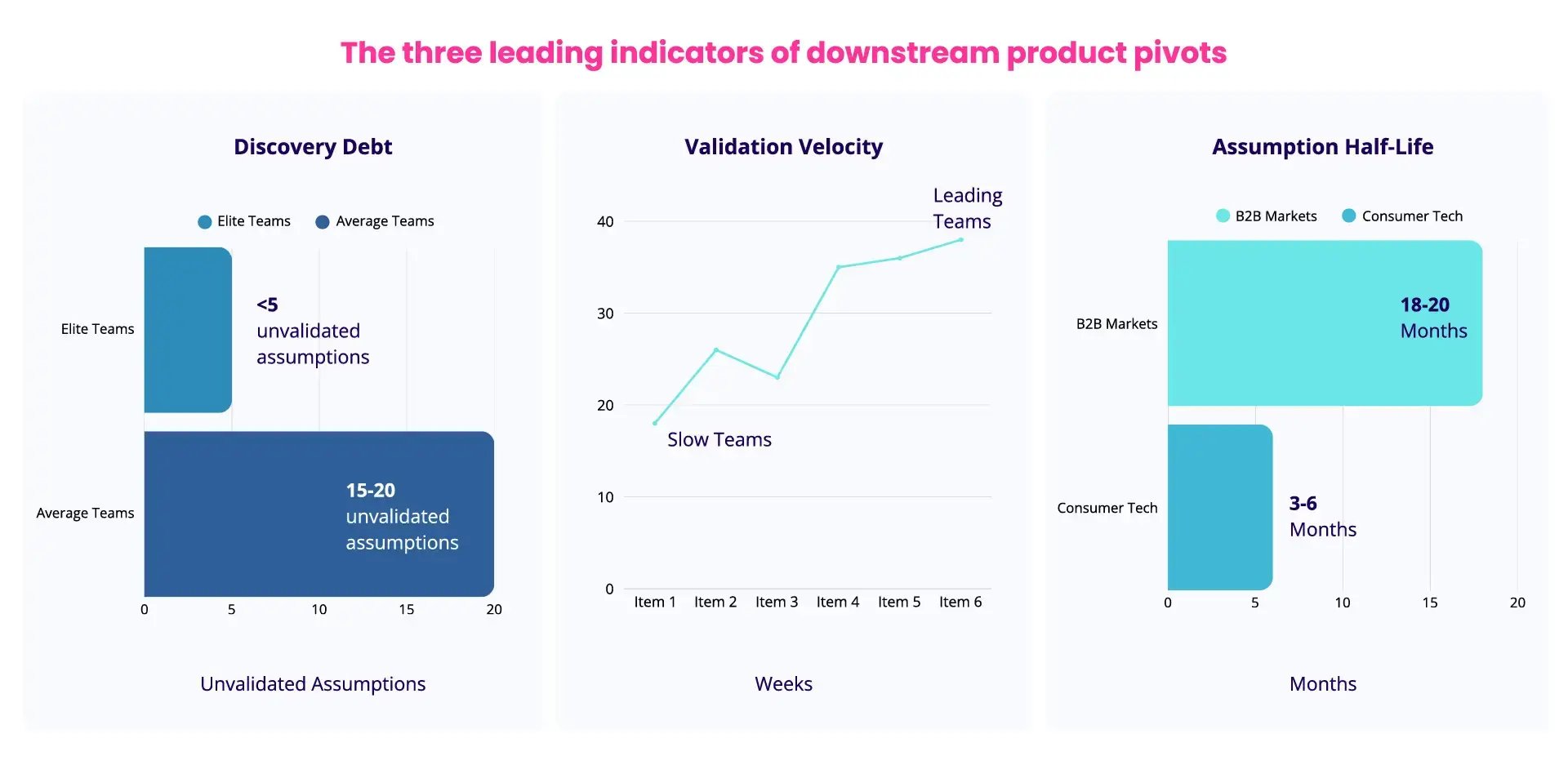

Key metrics: discovery debt, validation velocity, assumption half-life

Three key metrics quantify discovery effectiveness and predict downstream rework.

- Discovery debt

Discovery debt measures unvalidated assumptions carried into development. High discovery debt signals rushed discovery processes and predicts future pivots. Teams that track this metric intervene before problems cascade.

Calculate discovery debt by counting the critical assumptions entering the development phase and subtracting those with documented validation. As a benchmark, high performers carry fewer than 5 unvalidated assumptions per major feature. Average teams carry 15 to 20.

- Validation velocity

Validation velocity tracks how quickly teams test and resolve critical uncertainties. Fast validation enables teams to fail early, pivot cheaply, and commit resources with confidence.

Validation velocity is the number of critical assumptions validated divided by the length of the period, measured per sprint or week. As a benchmark, leading product teams validate 3 to 5 assumptions weekly through user research, prototyping, or market research. Slow teams validate 1 or fewer.
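As a rough illustration, validation velocity is a simple ratio. The sketch below assumes a weekly window, and the band labels ("leading", "slow", "in between") are hypothetical names layered on top of the benchmarks quoted above.

```python
def validation_velocity(assumptions_validated: int, weeks: float) -> float:
    """Critical assumptions validated per week over the observation window."""
    if weeks <= 0:
        raise ValueError("weeks must be positive")
    return assumptions_validated / weeks


def velocity_band(per_week: float) -> str:
    """Classify a weekly velocity against the benchmarks in the text.

    The band names are illustrative and not part of any standard.
    """
    if per_week >= 3:
        return "leading"
    if per_week <= 1:
        return "slow"
    return "in between"
```

For example, a squad that validated 8 assumptions over a two-week sprint has a velocity of 4 per week, which falls in the leading band.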

- Assumption half-life

Assumption half-life measures how long validated insights remain reliable in changing markets. Short half-lives require continuous validation loops. Long half-lives justify deeper upfront research.

Calculate assumption half-life by tracking when past validations became obsolete or required retesting, then taking the median time to obsolescence. As a benchmark, stable B2B markets average 12 to 18 months. Consumer tech drops to 3 to 6 months.
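One way to sketch the half-life calculation in Python, assuming you keep a log of when each insight was validated and when it later became obsolete. The record layout and the 30.44-day month approximation are assumptions of this example.

```python
from datetime import date
from statistics import median


def assumption_half_life_months(records: list[tuple[date, date]]) -> float:
    """Median months between validation and obsolescence.

    Each record is (validated_on, obsolete_on). A month is approximated
    as 30.44 days, which is a simplification made for this sketch.
    """
    if not records:
        raise ValueError("need at least one obsolete validation")
    spans = [(obsolete - validated).days / 30.44 for validated, obsolete in records]
    return median(spans)
```

The median is used rather than the mean so that one unusually long-lived or short-lived insight does not skew the estimate.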

Exhibit 2: Product discovery metrics dashboard illustrating best practice benchmarks

Why most product discovery efforts fail

Most teams conduct discovery. Few conduct it well. Organizations treat discovery as a checklist rather than a learning system. Product teams gather data but skip synthesis. Development teams validate ideas but ignore contradictory signals.

The result is discovery theater: activity that looks rigorous but fails to reduce uncertainty.

Five common mistakes that guarantee rework

These mistakes appear across industries and organization sizes. Each one undermines discovery effectiveness and drives rework into later phases.

1. Confusing customer feedback with validated learning

Customers articulate wants but rarely reveal underlying pain points. Product teams that build directly from feedback requests create features users ask for but don't use. Validation requires observing behavior, not just collecting opinions.

2. Validating solutions before validating problems

Teams prototype features and test usability while the core problem remains unproven. This inverts the discovery process and commits resources to solving challenges that may not matter to the target audience.

3. Running discovery in isolation from delivery teams

Product managers conduct research, then hand specifications to engineers who lack context. The handoff creates information loss and eliminates the shared understanding that prevents misinterpretation during development.

4. Mistaking consensus for validation

Teams seek stakeholder alignment rather than market evidence. Internal agreement feels productive but offers no protection against market rejection.

5. Stopping discovery when development starts

Organizations treat discovery as a phase rather than a continuous discipline. Markets shift, assumptions decay, and product teams build toward outdated targets while competitors adapt to changing market conditions.

Exhibit 3: Five common product discovery mistakes that cause development rework

How elite development teams structure product discovery differently

Elite teams embed discovery throughout the product development process rather than isolating it upfront. They maintain small, cross-functional squads that follow Jeff Bezos's two-pizza rule: small enough that two pizzas can feed the team. These squads include engineering, design, and product management from day one. In larger organizations, total project membership may be higher, but core discovery squads remain tight and focused.

These teams operate with explicit hypotheses rather than feature requests. Every initiative starts with a testable statement: "Sales teams will adopt this workflow if it reduces proposal time by 30 percent." Success criteria and kill criteria are defined before any product components are designed or developed.

Elite teams separate learning budgets from delivery budgets. They allocate 15 to 25 percent of sprint capacity specifically to research activities: user testing, prototype validation, market research, and assumption testing. This capacity is protected from feature pressure.

The ROI of discovery: spending more to ship faster

Organizations that invest more in discovery ship faster, not slower. Teams spending less than 10 percent of capacity on discovery average 18 to 24 months from concept to launch. Teams investing 15 to 25 percent average 12 to 15 months.

For a product team with $5 million in annual development spending, this translates to $1.5 million to $2.5 million in avoided costs. The return on discovery investment ranges from 3:1 to 5:1. Organizations that treat discovery as overhead rather than insurance pay 2 to 3 times more to reach the same outcomes.

Product discovery phases: reducing risk before development

Risk compounds as products move toward launch. A flawed assumption in month one becomes a failed feature in month six and a costly pivot in month twelve. The further a product progresses before catching errors, the more expensive those errors become to fix.

Structured discovery phases reduce this risk by creating deliberate checkpoints before development begins. Instead of treating discovery as a single gate, effective teams break it into distinct phases, each designed to retire specific uncertainties.

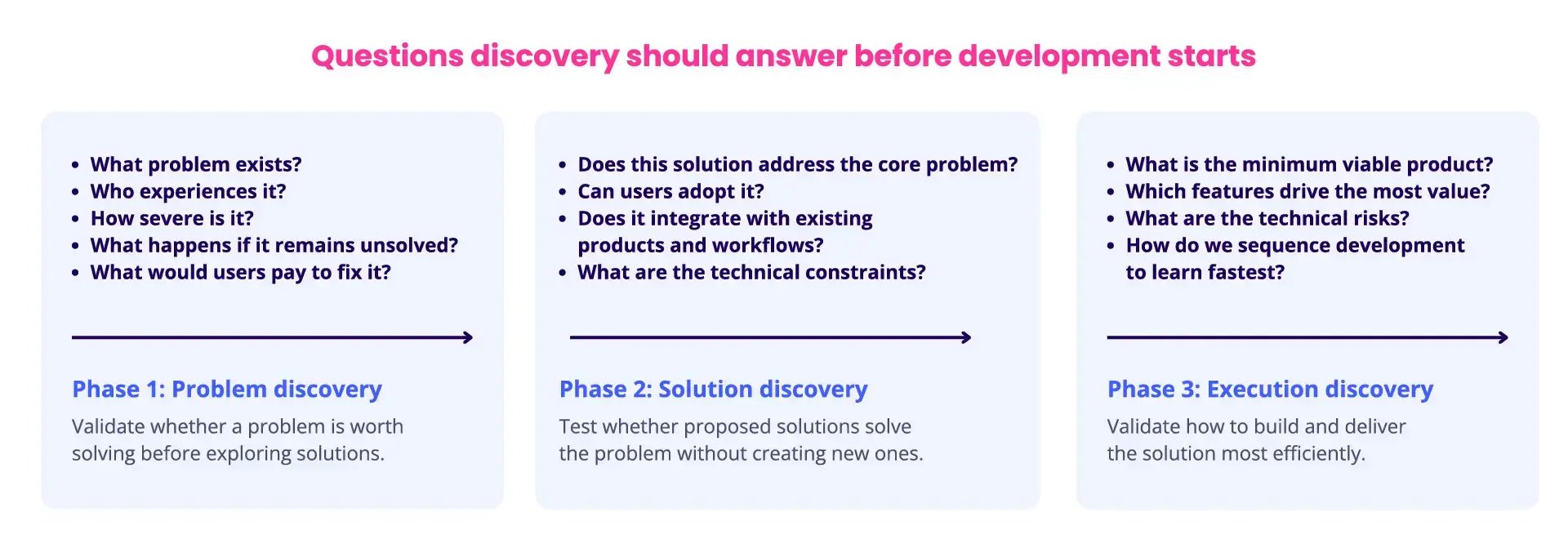

The three discovery phases (problem, solution, execution)

Each phase addresses specific questions and requires different validation methods.

Phase 1: Problem discovery

This phase validates whether a problem is worth solving. Teams investigate customer needs, pain points, and the economic value of solving specific challenges. The goal is to gain a clear understanding of the problem space before generating solutions.

Key questions this phase seeks to explore and answer:

- What problem exists?
- Who experiences it?
- How severe is it?
- What happens if it remains unsolved?
- What would users pay to fix it?

Phase 2: Solution discovery

This validation phase tests whether a proposed solution solves the validated problem. Teams prototype concepts, test usability, and verify that the solution delivers desired outcomes without creating new problems.

Key questions this phase seeks to explore and answer:

- Does this solution address the core problem?
- Can users adopt it?
- Does it integrate with existing products and workflows?
- What are the technical constraints?

Phase 3: Execution discovery

This phase validates how to build and deliver the solution efficiently. Teams resolve technical uncertainties, assess feasibility, and identify the most valuable features to build first.

Key questions this phase seeks to explore and answer:

- What is the minimum viable product?
- Which features drive the most value?
- What are the technical risks?
- How do we sequence development to learn fastest?

Exhibit 4: Three-phase product discovery framework: problem, solution, and execution discovery

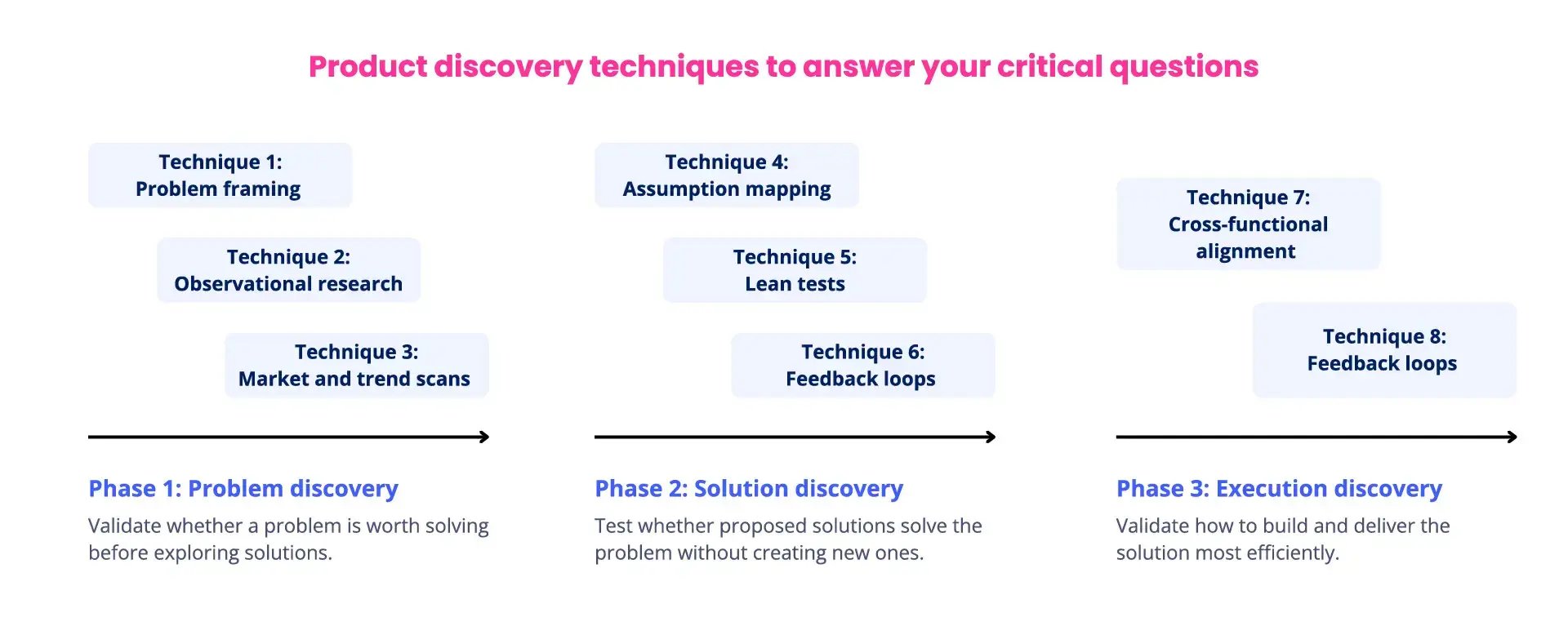

How techniques map to your product development process

Different discovery techniques work best at different phases. Selecting the right technique at the right time prevents wasted effort and accelerates learning.

Problem discovery techniques:

- Problem framing (Technique 1) clarifies what problem deserves attention
- Observational research (Technique 2) reveals unspoken user needs
- Market and trend scans (Technique 6) identify emerging opportunities

Solution discovery techniques:

- Assumption mapping (Technique 3) exposes high-risk unknowns
- Lean tests (Technique 4) validate ideas without overbuilding
- Feedback loops (Technique 5) calibrate solutions to real demand

Execution discovery techniques:

- Cross-functional alignment (Technique 7) prevents handoff failures
- Feedback loops (Technique 5) continue throughout development

The critical skill is matching technique intensity to uncertainty level. High uncertainty demands rigorous validation using multiple techniques. Low uncertainty allows lighter application and faster progression.

Exhibit 5: The seven product discovery techniques mapped across three discovery phases

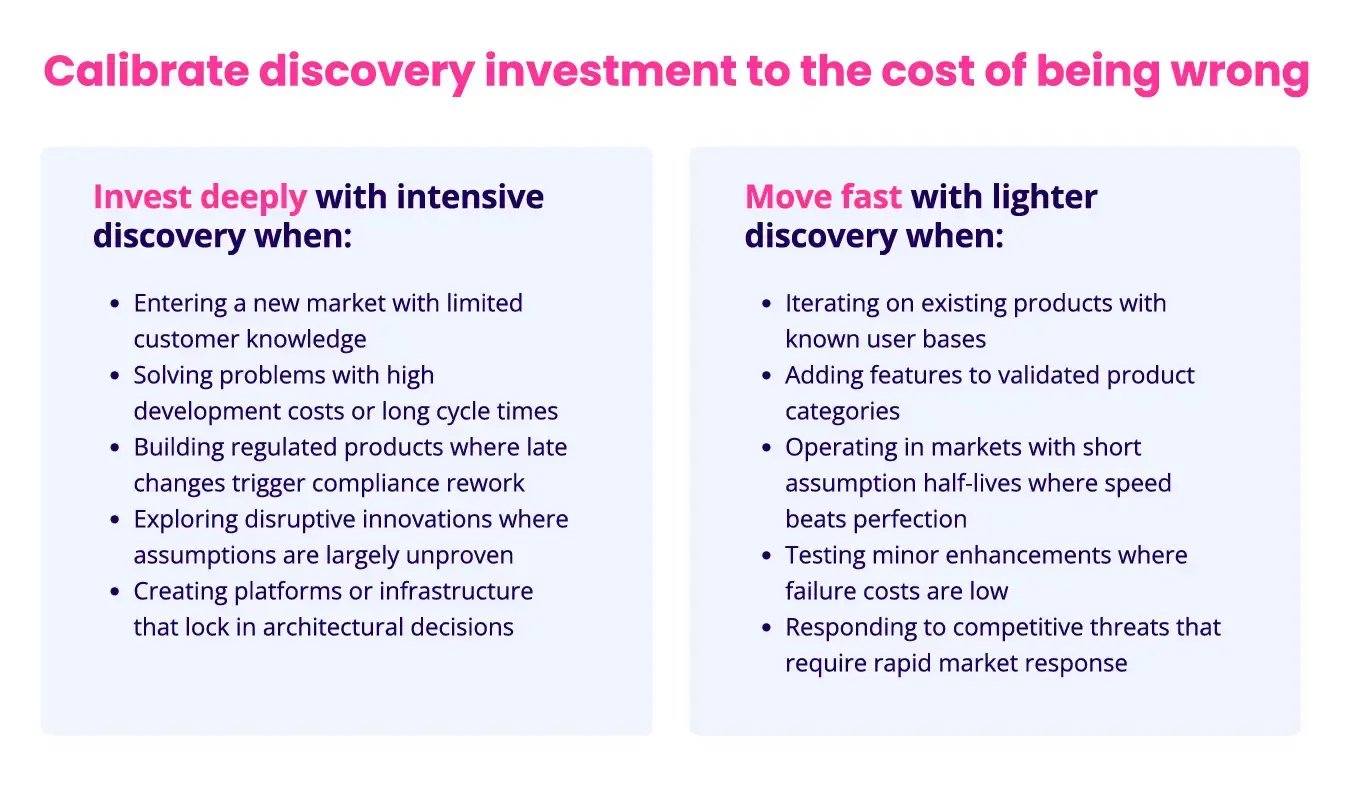

When to invest deeply in discovery vs. move fast

Not every discovery effort requires the same depth. Teams must calibrate their investment in discovery techniques based on risk and novelty.

Invest deeply in discovery techniques when:

- Entering a new market with limited customer knowledge
- Solving problems with high development costs or long cycle times
- Building regulated products where late changes trigger compliance rework
- Exploring disruptive innovations where assumptions are largely unproven
- Creating platforms or infrastructure that lock in architectural decisions

Move fast with lighter discovery when:

- Iterating on existing products with known user bases
- Adding features to validated product categories
- Operating in markets with short assumption half-lives where speed beats perfection
- Testing minor enhancements where failure costs are low
- Responding to competitive threats that require rapid market response

The goal is proportional investment: match discovery rigor to the cost of being wrong.

Exhibit 6: Decision framework for calibrating product discovery investment based on risk

Seven product discovery techniques that reduce costly rework

These seven techniques address the most common sources of rework in product development. Each technique targets a specific type of uncertainty. Applied together across the three discovery phases, they create a structured approach to validating assumptions before committing development resources.

Technique 1: Problem framing that sharpens product management decisions

Problem framing defines the boundaries, constraints, and success criteria for a problem before exploring solutions. It transforms vague opportunities into specific, testable hypotheses about customer needs and business value.

Most product failures stem from solving the wrong problem. Research shows that 40 to 50 percent of development effort goes to product features that customers rarely or never use. Problem framing prevents this waste by forcing clarity on what problem exists, who experiences it, and what success looks like.

Best practice involves articulating the problem as a falsifiable statement. Instead of "customers need better reporting," elite teams frame it as "finance managers spend 8+ hours monthly on manual report assembly, costing $50,000 annually per organization."

Apply problem framing by documenting five elements:

- The problem statement
- Affected user segments
- Current workarounds and their costs
- Success metrics
- Conditions that would invalidate the problem

Product managers who invest 2 to 3 days in rigorous problem framing reduce downstream scope changes by 60 percent.

Exhibit 7: Problem framing template for product discovery with five key elements

Technique 2: Observational research beyond customer feedback

Observational research captures what users actually do rather than what they say they do. It involves watching users in their natural environment, documenting workflows, and identifying pain points that users have normalized or cannot articulate.

Customer feedback reveals stated preferences. Observation reveals actual behavior. Studies show a 30 to 40 percent gap between what users report in surveys and what they do in practice. This gap explains why products built from feedback alone often fail adoption tests.

Best practice combines contextual inquiry with task analysis. Teams observe users completing real tasks in their work environment. User interviews and focus groups complement observation by exploring intent. Sessions last 60 to 90 minutes and involve 8 to 12 participants to reach pattern saturation.

Apply observational research early in problem discovery. Schedule sessions before forming solutions. Document direct quotes, user workarounds, and moments of friction. Synthesize findings into behavioral insights rather than feature requests. Teams that conduct observational research before ideation reduce post-launch feature revisions by 45 percent.

Technique 3: Assumption mapping to target high-risk unknowns

Assumption mapping identifies and prioritizes the critical uncertainties that could invalidate a product strategy. It transforms implicit assumptions into explicit hypotheses, then sequences validation work based on risk and dependency.

Every product initiative carries dozens of assumptions about users, markets, technology, and business models. Research on failed products shows that 70 percent of failures trace to unvalidated assumptions that teams treated as facts. Assumption mapping surfaces these potential risks before they become expensive mistakes.

Best practice involves listing all assumptions, then scoring each on two dimensions:

- Importance to success
- Level of evidence

High-importance, low-evidence assumptions become validation priorities. Teams map dependencies to identify which assumptions must be validated first.
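The two-dimension scoring can be sketched as a small ranking routine. The 1-to-5 scales and the `importance * (6 - evidence)` risk formula below are assumptions made for illustration, not a prescribed method, and dependency mapping is left out to keep the example short.

```python
from dataclasses import dataclass


@dataclass
class Assumption:
    statement: str
    importance: int  # 1 (minor) to 5 (critical to success)
    evidence: int    # 1 (no evidence) to 5 (strong evidence)

    @property
    def risk(self) -> int:
        # High importance combined with low evidence yields the highest score.
        return self.importance * (6 - self.evidence)


def validation_priorities(assumptions: list[Assumption], top_n: int = 8) -> list[Assumption]:
    """Return up to top_n assumptions, highest risk first."""
    return sorted(assumptions, key=lambda a: a.risk, reverse=True)[:top_n]
```

Any scoring scheme works as long as the whole squad applies it consistently. The point is to make the ranking explicit so validation effort goes to the assumptions that matter most and are least proven.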

Apply assumption mapping at the start of solution discovery. Gather cross-functional teams to surface assumptions from multiple perspectives. Engineers identify technical assumptions. Product managers surface market assumptions. Designers reveal usability assumptions. Document 20 to 40 assumptions per initiative, then test assumptions for the top 5 to 8 highest-risk items before committing to development. Organizations using assumption mapping reduce mid-project pivots by 50 percent.

Technique 4: Lean tests that validate ideas without overbuilding

Lean tests validate critical assumptions using minimum viable experiments rather than full product builds. They answer specific questions with the least investment possible, enabling rapid learning cycles before committing significant development resources.

Organizations waste development capacity building features to test assumptions that could be validated with prototypes, landing pages, or concierge services. Data shows that teams can validate 80 percent of critical assumptions using experiments that cost 5 to 10 percent of full development.

Best practice involves designing tests that isolate single variables. A landing page tests demand. A clickable prototype tests workflow logic. A manual concierge service tests the value proposition before automation.

Apply lean tests by defining the assumption, the evidence needed to validate it, and the minimum experiment required. Run tests with 20 to 50 users for qualitative insights or 200+ for quantitative insights. Set clear success criteria before running the test. Teams that adopt lean testing validate assumptions 5 to 8 times faster than those building full prototypes.
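To make "set clear success criteria before running the test" concrete, here is a hedged sketch in which the thresholds are fixed when the test is defined and cannot be changed afterwards. The `LeanTest` class, its field names, and the rate-based thresholds are hypothetical. The build, iterate, and kill outcomes mirror the decision types used elsewhere in this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen: criteria cannot be edited after the test is defined
class LeanTest:
    assumption: str
    experiment: str            # e.g. landing page, clickable prototype, concierge service
    success_threshold: float   # minimum success rate that counts as validation
    kill_threshold: float      # success rate at or below which the idea is dropped

    def decide(self, successes: int, participants: int) -> str:
        """Map observed results to a build, iterate, or kill decision."""
        if participants <= 0:
            raise ValueError("participants must be positive")
        rate = successes / participants
        if rate >= self.success_threshold:
            return "build"
        if rate <= self.kill_threshold:
            return "kill"
        return "iterate"
```

For instance, a landing-page test defined with a 10 percent sign-up threshold and a 2 percent kill threshold returns "build" when 30 of 200 visitors sign up.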

Technique 5: Feedback loops that align development teams with demand

Feedback loops create systematic mechanisms for collecting, analyzing, and acting on signals from users, markets, and internal teams. They ensure product teams remain calibrated to real customer demands throughout the product life cycle rather than building toward outdated assumptions.

Products fail when teams lose touch with evolving customer needs and market trends. Research shows that assumption half-life in fast-moving markets is 3 to 6 months. Without continuous user feedback, teams build products for yesterday's problems.

Best practice establishes multiple feedback mechanisms operating at different frequencies. Weekly user testing sessions validate tactical decisions. Monthly trend analysis identifies shifting demands. Quarterly business reviews assess product-market fit.

Apply feedback loops by implementing instrumentation early. Add analytics to prototypes and minimum viable products to track actual usage patterns. Schedule recurring user research sessions rather than one-time studies. Organizations with mature feedback loops reduce feature abandonment rates by 35 percent and increase customer retention by 20 to 30 percent.

Technique 6: Market scans that bridge basic research and applied research

Market scans systematically monitor industry trends, competitive movements, emerging technologies, and regulatory changes that could impact product strategy. They connect scientific research and technology advances to practical product opportunities.

Organizations that ignore market signals miss opportunities and get blindsided by disruption. Studies show that 60 percent of product pivots result from market shifts that were visible 12 to 18 months earlier. Market scans provide early warning systems that enable proactive product strategy adjustment.

Best practice involves structured environmental scanning across six dimensions:

- Competitive activity
- Technology evolution
- Regulatory changes
- Customer behavior shifts
- Economic trends
- Adjacent market innovations

The challenge is volume and velocity. Manual scanning cannot keep pace or sift through noise effectively. Leading organizations use platforms such as ITONICS that aggregate signals using AI, centralize data from multiple sources, and enable teams to find consensus on priorities.

Apply market scans by establishing a research cadence aligned with your industry's rate of change. Fast-moving tech sectors require monthly scans. Industrial products may need quarterly reviews. Teams that conduct systematic market scans identify strategic opportunities 9 to 12 months earlier than competitors.

Exhibit 8: Automated ITONICS radar tracking and real-time alerts for strategic market analysis

Technique 7: Cross-functional alignment that strengthens product delivery

Cross-functional alignment ensures that engineering, design, product management, and business stakeholders develop a shared understanding of problems, solutions, and priorities. It eliminates the information loss and misalignment that occurs when discovery happens in silos.

Handoff failures between functions account for 25 to 35 percent of development rework. When product managers conduct discovery alone, critical technical constraints and design implications emerge late in development, forcing expensive changes.

Best practice involves including representatives from all delivery functions in discovery activities from the start. Engineers participate in user research to understand technical implications. Designers join assumption mapping to identify usability risks. Business stakeholders contribute to problem framing to ensure commercial viability.

Apply cross-functional alignment by restructuring discovery as a team sport rather than a product management solo activity. Create standing discovery squads of 5 to 8 people with diverse functional expertise. Use shared artifacts like assumption maps, user journey maps, and test results that all functions contribute to and reference. Organizations with strong cross-functional alignment reduce late-stage requirement changes by 55 percent.

Embedding discovery into your product development process

Discovery techniques deliver value only when integrated into existing workflows. Treating discovery as a separate workstream creates friction and delays. The goal is to embed discovery into the product development process so it feels like a natural practice rather than additional overhead.

Successful integration requires three elements: clear phase structures that protect learning time, explicit links between discovery outputs and product decisions, and traceability systems that connect early insights to final product delivery.

Structuring product discovery phases without slowing delivery

The concern is valid: adding discovery phases sounds like adding time and cost. In practice, structured discovery accelerates delivery by eliminating expensive rework cycles.

Structure discovery using time-boxed phases with clear exit criteria. Problem discovery gets 2 to 3 weeks. Solution discovery gets 3 to 4 weeks. Execution discovery runs 1 to 2 weeks. These are maximum durations, not targets. Teams exit phases early when validation thresholds are met.

Run discovery in parallel with development where possible. While one team builds validated features, another team conducts discovery on the next initiative. This pipeline approach maintains continuous delivery while ensuring rigorous discovery. The key is phase gating. No feature enters development until its discovery phase completes and passes exit criteria.

Linking discovery outputs to product management decisions

Discovery generates insights. Product management requires decisions. The gap between insight and decision causes most implementation failures. Bridge this gap by defining explicit decision points where discovery outputs trigger specific actions.

Map discovery techniques to decision types. Problem framing outputs inform go/no-go decisions on whether to pursue an opportunity. Assumption mapping results determine what to validate next. Lean test results trigger build, iterate, or kill decisions.

Create standard artifacts that translate insights into decisions. A problem definition document includes the validated problem statement, affected segments, economic impact, and recommended next steps. An assumption validation report lists tested hypotheses, evidence gathered, and resulting product implications.

Establish decision authority clearly. Product managers own problem prioritization. Cross-functional squads own solution validation. Engineering leads own technical feasibility. Clear authority prevents discovery from devolving into consensus-seeking exercises.

Maintaining traceability from early insight to product delivery

Traceability connects early discovery insights to features shipped months later. Without it, teams lose context, repeat validation work, and make decisions based on outdated assumptions.

Build traceability into discovery artifacts from the start. Every assumption tested should link to the problem it addresses. Every lean test should connect to the assumptions it validates. Every feature in the product roadmap should trace back to validated insights.

Maintain a central repository where discovery outputs live: problem definitions, assumption maps, test results, user research findings, and market scan insights. Platforms like ITONICS enable this by connecting insights across phases, linking assumptions to validation evidence, and maintaining version history as insights evolve.

Tag insights with metadata: discovery phase, validation date, affected user segments, related assumptions, and confidence level. This enables quick retrieval when new questions emerge or when assumption half-life expires.
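A minimal sketch of such a tagged record, with a helper that flags insights older than the assumption half-life. The `Insight` class and its field names are assumptions for illustration and do not reflect the schema of any particular platform.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class Insight:
    summary: str
    phase: str                 # "problem", "solution", or "execution"
    validated_on: date
    segments: list[str] = field(default_factory=list)
    related_assumptions: list[str] = field(default_factory=list)
    confidence: str = "medium"  # e.g. "low", "medium", "high"


def needs_revalidation(insights: list[Insight], today: date, half_life_days: int) -> list[Insight]:
    """Return insights whose validation date is older than the half-life window."""
    cutoff = today - timedelta(days=half_life_days)
    return [i for i in insights if i.validated_on < cutoff]
```

Running this check on a schedule turns "assumption half-life expires" from a vague worry into a concrete retest list.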


Exhibit 9: The ITONICS platform showing traceability from discovery insights to product delivery

Measuring product discovery ROI and tracking improvement

Discovery investment requires justification. Executive stakeholders need evidence that discovery reduces rework and accelerates delivery. Without measurement, discovery remains a discretionary expense vulnerable to budget cuts.

Effective measurement tracks three layers: leading indicators that signal discovery health, discovery debt calculations that quantify risk, and ROI metrics that prove business value.

Leading indicators that your discovery process is working

Leading indicators predict future performance before lagging metrics shift. They enable course correction during discovery rather than after costly mistakes emerge.

1. Validation velocity

Track how many assumptions teams validate weekly. Teams validating 3 to 5 assumptions weekly maintain momentum and reduce discovery debt. Below 1 per week signals stalled discovery.

2. Assumption coverage

Calculate the percentage of critical assumptions with documented validation before entering development. Target 90 percent coverage. Below 70 percent predicts mid-project pivots.

3. Discovery cycle time

Track days from problem identification to a validated solution ready for development. Elite teams average 6 to 8 weeks. Cycles extending beyond 12 weeks indicate process inefficiencies.
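Assumption coverage is the easiest of these indicators to automate. The sketch below applies the 90 percent target and 70 percent warning level quoted above. The function names and signal labels are hypothetical.

```python
def assumption_coverage(validated: int, critical_total: int) -> float:
    """Percentage of critical assumptions with documented validation."""
    if critical_total <= 0:
        raise ValueError("critical_total must be positive")
    return 100.0 * validated / critical_total


def coverage_signal(coverage_pct: float) -> str:
    """Compare coverage with the 90 percent target and 70 percent warning level."""
    if coverage_pct >= 90:
        return "on target"
    if coverage_pct < 70:
        return "pivot risk"
    return "below target"
```

An initiative with 18 of 20 critical assumptions validated sits exactly at the 90 percent target.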

How to calculate and reduce your discovery debt

Discovery debt quantifies unvalidated assumptions carried into development. High debt predicts rework. Low debt enables confident execution.

Calculate discovery debt by listing all critical assumptions for each initiative entering development. Score each assumption on validation confidence: validated with strong evidence, partially validated with weak evidence, or unvalidated. Count unvalidated and partially validated assumptions as debt.

Benchmark your debt against targets. Elite teams carry fewer than 5 unvalidated assumptions per major feature. Average teams carry 15 to 20. Initiatives with more than 10 unvalidated assumptions should not enter development without additional discovery investment.
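The calculation and gate described above can be sketched in a few lines. The status strings ("validated", "partial", "unvalidated") are labels invented for this example, standing in for the three confidence levels.

```python
from collections import Counter

# Both partially validated and unvalidated assumptions count as debt.
DEBT_STATUSES = ("partial", "unvalidated")


def discovery_debt(statuses: list[str]) -> int:
    """Count assumptions that are unvalidated or only partially validated."""
    counts = Counter(statuses)
    return sum(counts[s] for s in DEBT_STATUSES)


def ready_for_development(statuses: list[str], max_unvalidated: int = 10) -> bool:
    """Apply the gate: more than max_unvalidated unvalidated assumptions blocks entry."""
    return Counter(statuses)["unvalidated"] <= max_unvalidated
```

Keeping the gate separate from the debt count lets a team tighten the threshold over time without changing how debt is recorded.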

Reduce discovery debt through systematic assumption mapping and validation. Prioritize high-impact, low-evidence assumptions first. Use lean tests to validate quickly. Track debt trends quarterly to measure success.

Building the business case for sustained discovery investment

Sustained discovery investment requires demonstrating ROI that justifies the 15 to 25 percent capacity allocation. Build the business case using three evidence types: avoided costs, accelerated delivery, and improved outcomes.

Calculate avoided rework costs by comparing current rework rates against baseline. If discovery techniques reduce rework from 40 percent to 20 percent of development capacity, calculate the dollar savings based on annual development spending. For a $10 million development budget, this saves $2 million annually.
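The avoided-cost figure is the budget multiplied by the drop in rework rate. A minimal sketch, assuming rework is expressed as a fraction of development capacity. The function name is hypothetical.

```python
def avoided_rework_cost(annual_budget: float, baseline_rate: float, current_rate: float) -> float:
    """Annual savings when rework falls from baseline_rate to current_rate.

    Rates are fractions of development capacity, e.g. 0.40 for 40 percent.
    """
    if not (0 <= current_rate <= baseline_rate <= 1):
        raise ValueError("rates must satisfy 0 <= current <= baseline <= 1")
    return annual_budget * (baseline_rate - current_rate)
```

Calling `avoided_rework_cost(10_000_000, 0.40, 0.20)` reproduces the roughly $2 million annual saving in the example above.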

Measure delivery acceleration by tracking time from concept to launch before and after implementing structured discovery. Document the 6- to 9-month improvement that high-investment teams achieve and translate it into competitive advantage and earlier revenue.

Quantify improved outcomes through product success metrics: user adoption rates, customer retention, and revenue per feature. Products built with rigorous discovery show 25 to 35 percent higher user satisfaction and 20 to 30 percent better retention.

Present this business case as a three-year investment with compounding returns. Year one focuses on building discovery capability. Year two captures initial ROI through reduced rework. Year three delivers sustained competitive advantage through faster, higher-quality product delivery.

The seven techniques outlined in this blog provide the operational foundation for this transformation. Applied systematically across the three discovery phases, they cut rework in half, accelerate delivery, and shift development capacity from fixing mistakes to building competitive differentiation.

FAQs on product discovery techniques

What is the difference between product discovery and product development?

Product discovery validates problems before committing resources. Product development builds the validated solution. Discovery answers "Should we build this?" Development answers "How do we build this?"

The distinction centers on risk and cost. Discovery uses low-cost experiments: prototypes, user interviews, lean tests. Development deploys full engineering resources, quality assurance, and production systems.

Teams that skip discovery transfer uncertainty into expensive build cycles. Research shows this costs 10 to 100 times more than early validation. Effective organizations run discovery continuously alongside development, maintaining separate budgets for learning versus delivery.

How long should product discovery take before starting development?

Discovery duration depends on uncertainty, market complexity, and the cost of being wrong. Most initiatives need 6 to 9 weeks across three phases. Problem discovery takes 2 to 3 weeks. Solution discovery takes 3 to 4 weeks. Execution discovery takes 1 to 2 weeks.

These are maximum durations, not fixed timelines. Teams exit phases early when validation thresholds are met.

High-risk initiatives justify deeper investment. Entering new markets or building regulated products may need 12 to 16 weeks. Low-risk efforts like iterating validated products may need only 3 to 4 weeks.

The counterintuitive result: organizations that invest 15 to 25 percent of their capacity in discovery ship faster. They average 12 to 15 months from concept to launch. Teams spending less than 10 percent average 18 to 24 months. Discovery eliminates expensive rework cycles that slow delivery.

What is discovery debt and why does it matter?

Discovery debt measures unvalidated assumptions carried into development. It quantifies the risk teams accept when building before validating critical uncertainties. High discovery debt predicts mid-project pivots, scope changes, and rework.

Calculate it by listing critical assumptions for each initiative entering development. Count those without documented validation evidence. Elite teams carry fewer than 5 unvalidated assumptions per major feature. Average teams carry 15 to 20.

Initiatives with more than 10 unvalidated assumptions should not enter development. Research shows that 70 percent of product failures can be traced to unvalidated assumptions that teams treated as facts.

The economics are stark. Each unvalidated assumption represents potential rework. Fixes cost 20 to 200 times more during or after development than during discovery. Tracking discovery debt transforms hidden risk into a manageable metric.

Can product discovery work in agile or lean development environments?

Product discovery integrates naturally with agile and lean methodologies. All three emphasize learning, iteration, and customer validation. Discovery complements rather than conflicts with these approaches.

The key is embedding discovery into sprint cycles. High-performing agile teams allocate 15 to 25 percent of sprint capacity to discovery activities. User testing, assumption validation, prototyping, and market research run parallel to development work.

Discovery activities fit within agile ceremonies. Problem framing informs backlog prioritization. Assumption mapping happens during sprint planning. Lean tests run as spike stories. Feedback loops operate through sprint reviews and retrospectives.

The agile principle of responding to change requires continuous discovery. Markets shift every 3 to 6 months in fast-moving sectors. Teams that stop discovery when development starts build toward outdated assumptions while competitors adapt.

What capabilities should teams look for in product discovery software?

Effective discovery requires centralized platforms that maintain traceability from early research to final delivery. The critical capabilities separate functional tools from strategic systems.

First, look for end-to-end integration. The platform should connect market scans, trend analysis, assumption tracking, and validation evidence in one system. Scattered tools create information loss and force teams to manually reconcile insights across multiple sources.

Second, prioritize intelligent aggregation. Platforms like ITONICS use AI to aggregate signals, filter noise, and surface relevant patterns. Manual scanning cannot keep pace with the volume and velocity of market signals.

Third, ensure robust traceability features. Link assumptions to validation tests. Connect early insights to features shipped months later. Tag insights with metadata: discovery phase, validation date, and confidence level.

Without these capabilities, teams lose context and repeat validation work. Critical insights scatter across documents. Decisions rest on outdated assumptions no one can trace.